The proposed regulation of AI systems: what you need to know

Authors/contacts: Karim Derrick, Tom Gummer.

The European Commission has recently published a landmark proposal for a legal framework that will regulate, and even ban, certain AI systems.

The EU has a history of standing up to big technology companies. Examples include recent proposals under the Digital Markets Act that would allow such companies to be broken up for repeated anti-competitive behaviour, and the data use restrictions imposed by the GDPR. The proposed AI systems regulatory framework is arguably another step in that direction.

Companies that develop or use AI systems, including insurers and law firms, will be looking closely at the proposals. The GDPR has also set a precedent: when the EU acts to regulate technology, it creates a gold standard that the rest of the world has tended to fall in line with. And as with the GDPR, the new regulations are to be extraterritorial in scope. Irrespective of your location, if you are providing AI systems to the EU and have EU citizens as customers, you will be subject to the regulations.

At the heart of the proposal is the Commission’s view that “AI should be a tool for people and be a force for good in society with the ultimate aim of increasing human well-being” (1).

The framework gives this view teeth by:

- Prohibiting certain AI systems outright; and

- Categorising others as high risk, subjecting those who produce or use them to specific mandatory requirements.

And the penalty for non-compliance? A range of fines, up to €30M or 6% of total worldwide annual turnover (whichever is higher) for the worst breaches. That is a 50% uplift on the maximum fines already in place for breaches of the GDPR, which stand at €20M or 4% of turnover.

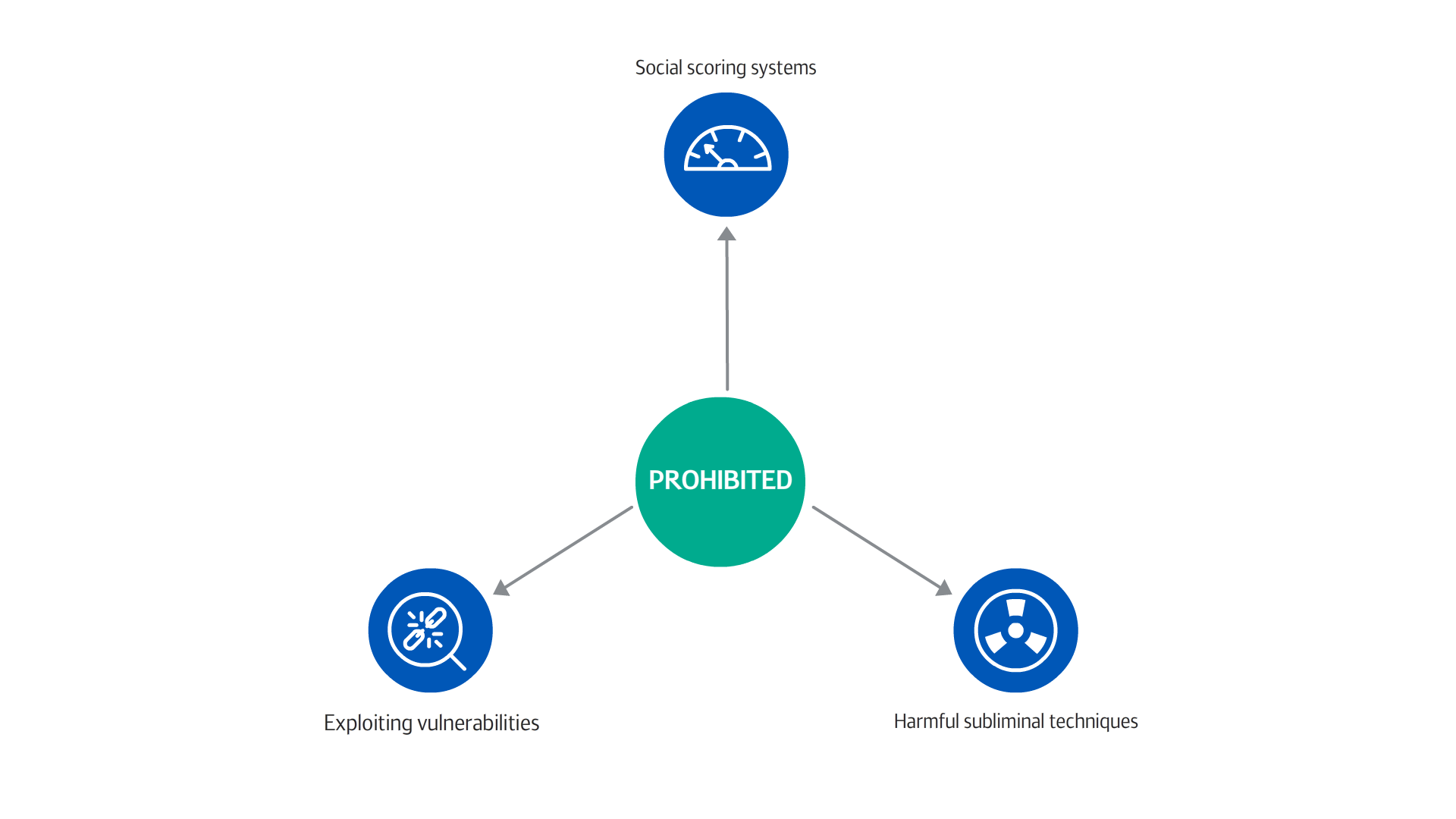

Prohibited AI systems

AI systems that involve particularly harmful practices contravening Union values are to be prohibited. These include AI systems:

1. Used by public bodies to classify the trustworthiness of people based on their social behaviour or personality, where the resulting social score leads to detrimental treatment. This is effectively a ban on social credit scoring systems.

2. That deploy subliminal techniques, operating beyond a person's consciousness, to cause harm. An example might be a toy that uses voice assistance to manipulate a child into doing something dangerous (2).

3. That exploit the vulnerabilities of specific groups, arising from age or physical or mental disability, to affect their behaviour and cause harm. An example might be an assistive device that reminds people with dementia to take their medication but at the same time encourages them to spend money on unnecessary drugs.

High risk AI systems

What is perhaps more contentious is the extent to which AI systems have been categorised as high risk (see our diagram for a full list). These include AI systems used:

1. To determine an individual’s access to essential services. For example, deciding who should be eligible for state welfare or granted private credit.

2. To assist a judicial authority in researching and interpreting facts and then in applying the law to those facts.

3. For recruitment and work management purposes. For example, filtering job applications or allocating work tasks amongst employees.

4. For educational purposes, for example assessing participants in tests.

Such systems will be permitted only if they comply with mandatory requirements, which include registration, detailed technical documentation and audits.

Public authorities will have the power to intervene to stop their use under certain circumstances.

Could this evolve to affect the use of AI within the legal and insurance sectors?

The proposal grants the Commission the power to add to the list of systems classed as high risk. It is likely to be some years before the proposal becomes binding legislation, if indeed it ever does. Digital rights groups are also already pressing for the framework to be strengthened, which could lead to new categories being added or existing ones being broadened.

Could the definition of ‘judicial authority’ be stretched to include law firms, or even insurers, that create or use AI to interpret the facts of a claim and apply the law to them to reach an outcome? Could claims analytics, or the use of AI to determine legal liability, make coverage decisions or set the level of damages to be offered, be classed as high risk?

Might AI systems that help determine whether an individual should be granted access to a particular insurance product or claim payout be caught if ‘private services’ are defined to include insurance? There is a long way to go before these proposals are finalised. The outline proposals are, though, a major milestone in the inevitable reining in of the freedoms enjoyed by technology companies. The whole world is watching with interest.

(2) https://ec.europa.eu/commission/presscorner/detail/en/SPEECH_21_1866
